Time and space complexity

Want to improve your code's efficiency? Want to know how much space your code takes up? Learn about time and space complexity.

Time and space complexity are very important concepts in programming. Despite their importance, many programmers ignore them. Understanding time complexity helps you optimize your code and make it faster, and understanding space complexity helps you reduce the memory it uses.

Are time and space complexity tricky subjects? Not at all. Are they all about mathematics? Not exactly. Sure, they involve a bit of math, but there is more to them than that. Is this blog going to make your life as a coder better? Of course! In this blog, you will learn about time complexity and space complexity.

What is Time complexity?

Let us consider two computers: one is old and the other is brand new. Both run the linear search algorithm to search for the number “10” in an array of a million numbers. The old computer takes 10 s to complete this task and the new one takes only 1 s. Which computer has better time complexity?

If your answer is the new one, you are wrong. If your answer is the old one, you are wrong again. If your answer is “both have the same time complexity”, BRAVO! You are right! The most common misconception about time complexity is that it equals the time taken to execute the program. This is not true at all.

Time complexity is a function that describes the relationship between running time and input size. In simple terms, it tells you how the time grows as the input grows. It is the same no matter how old or new the computer is.

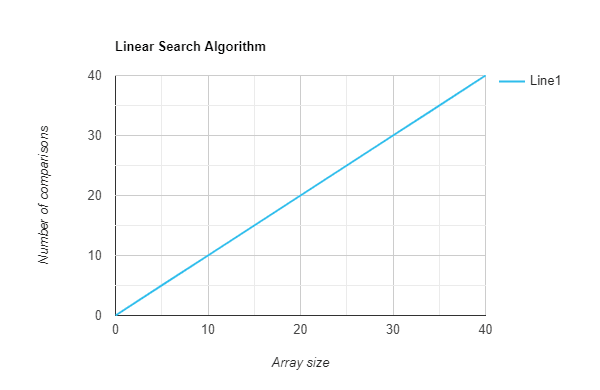

To make things clear, look at the graph above. On both computers, the running time grows linearly with the input size; the only difference is the slope. For time complexity, we ignore the slope and look only at how one variable grows relative to the other.

Why does time complexity matter for your code?

As mentioned in the introduction, understanding time complexity helps you improve the efficiency of your code. Here, we look at this in more depth.

Let us take three algorithms with time complexities O(N), O(log N) and O(1). Using time complexity, we can tell which algorithm is more efficient. Look at the graph below for further understanding.

When you analyze the graph for large inputs, you will see that O(1) grows more slowly than O(log N), and O(log N) grows more slowly than O(N). So the O(1) algorithm is the most efficient and the O(N) one the least. You might wonder why we focus on large inputs: in computer science, we often deal with large amounts of data. For small inputs, an O(N) algorithm can actually run faster than an O(1) one because of constant factors, but that advantage disappears as the input grows.

Things to keep in mind when analyzing time complexity:

- Look at the worst-case complexity.
- Look at the complexity for large inputs.
- Ignore all constants.
- Ignore less dominant terms.

Important terms

Big O notation

Big O notation denotes an upper bound: the running time of the algorithm grows no faster than the given function (up to a constant factor) as the input grows.

Example:

The worst-case time complexity of the bubble sort algorithm is O(N²). Its running time never grows faster than N².

Mathematically, f(n) = O(g(n)) when:

$$\lim_{n \to \infty} \frac{f(n)}{g(n)} < \infty$$
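As a worked example, take a made-up running time f(n) = 3n² + 5n + 2 and g(n) = n². Taking the limit shows the constant factor stays finite and the lower-order terms vanish, so f(n) = O(n²):

$$\lim_{n \to \infty} \frac{3n^2 + 5n + 2}{n^2} = \lim_{n \to \infty} \left(3 + \frac{5}{n} + \frac{2}{n^2}\right) = 3 < \infty$$

This is also why constants and less dominant terms can be ignored: they do not change whether the limit is finite.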

Big Omega notation

Big Omega is the counterpart of Big O notation. It denotes a lower bound: the running time of the algorithm grows at least as fast as the given function (up to a constant factor).

Example:

The best-case time complexity of bubble sort is Ω(N), which happens on an already sorted array when the algorithm stops after a pass with no swaps. Its running time never grows slower than N.

Mathematically, f(n) = Ω(g(n)) when:

$$\lim_{n \to \infty} \frac{f(n)}{g(n)} > 0$$

Theta notation

Let's say you have an algorithm with O(N) as the upper bound and Ω(N) as the lower bound. How do you express that?

We use Theta notation for this situation: an algorithm that is both O(N) and Ω(N) can be denoted as Θ(N). Theta is a tight bound, meaning the upper and lower bounds grow at the same rate.

Mathematically, f(n) = Θ(g(n)) when:

$$0 < \lim_{n \to \infty} \frac{f(n)}{g(n)} < \infty$$

Little o notation

Little o notation is similar to Big O notation, but it makes a stronger statement: it is a strict upper bound.

For example, f = o(g) means that f grows strictly slower than g.

Mathematically, f(n) = o(g(n)) when:

$$\lim_{n \to \infty} \frac{f(n)}{g(n)} = 0$$
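For instance, n = o(n²), because

$$\lim_{n \to \infty} \frac{n}{n^2} = \lim_{n \to \infty} \frac{1}{n} = 0$$

By contrast, 2n² is O(n²) but not o(n²), since the limit of 2n²/n² is 2, not 0.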

Little omega notation

Little omega notation is similar to Big Omega notation, but it makes a stronger statement: it is a strict lower bound.

For example, f = ω(g) means that f grows strictly faster than g.

Mathematically, f(n) = ω(g(n)) when:

$$\lim_{n \to \infty} \frac{f(n)}{g(n)} = \infty$$
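For instance, n² = ω(n), because

$$\lim_{n \to \infty} \frac{n^2}{n} = \lim_{n \to \infty} n = \infty$$

By contrast, 3n is Ω(n) but not ω(n), since the limit of 3n/n is 3, which is finite.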

Space Complexity

Space complexity is generally easier to analyze than time complexity. It is the total memory an algorithm uses as a function of the input size. It is the sum of the auxiliary space (the extra memory the algorithm uses) and the input space.

For example, insertion sort uses only O(1) auxiliary space. However, its space complexity is O(n), because the input array itself takes n slots.

Space Complexity of Recursive Algorithms:

In a recursion tree, only the calls that lie on a single path from the root to the current call are on the call stack at the same time.

So, the space complexity is proportional to the height of the tree.

Let's take an example of a function that calculates the sum of the first n natural numbers.

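```c
/* Returns 1 + 2 + ... + n using a loop. */
int sum(int n)
{
    int i, sum = 0;   /* a fixed number of local variables */

    for (i = 1; i <= n; i++)
        sum = sum + i;

    return sum;
}
```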

In the above example, the input 'n' is a single integer, so it takes O(1) space no matter how large its value is. The auxiliary space is also O(1), because 'sum' and 'i' are a fixed number of variables that does not depend on n.

The total space complexity is O(1).
